Andy Edser

Originally published at www.pcgamer.com.

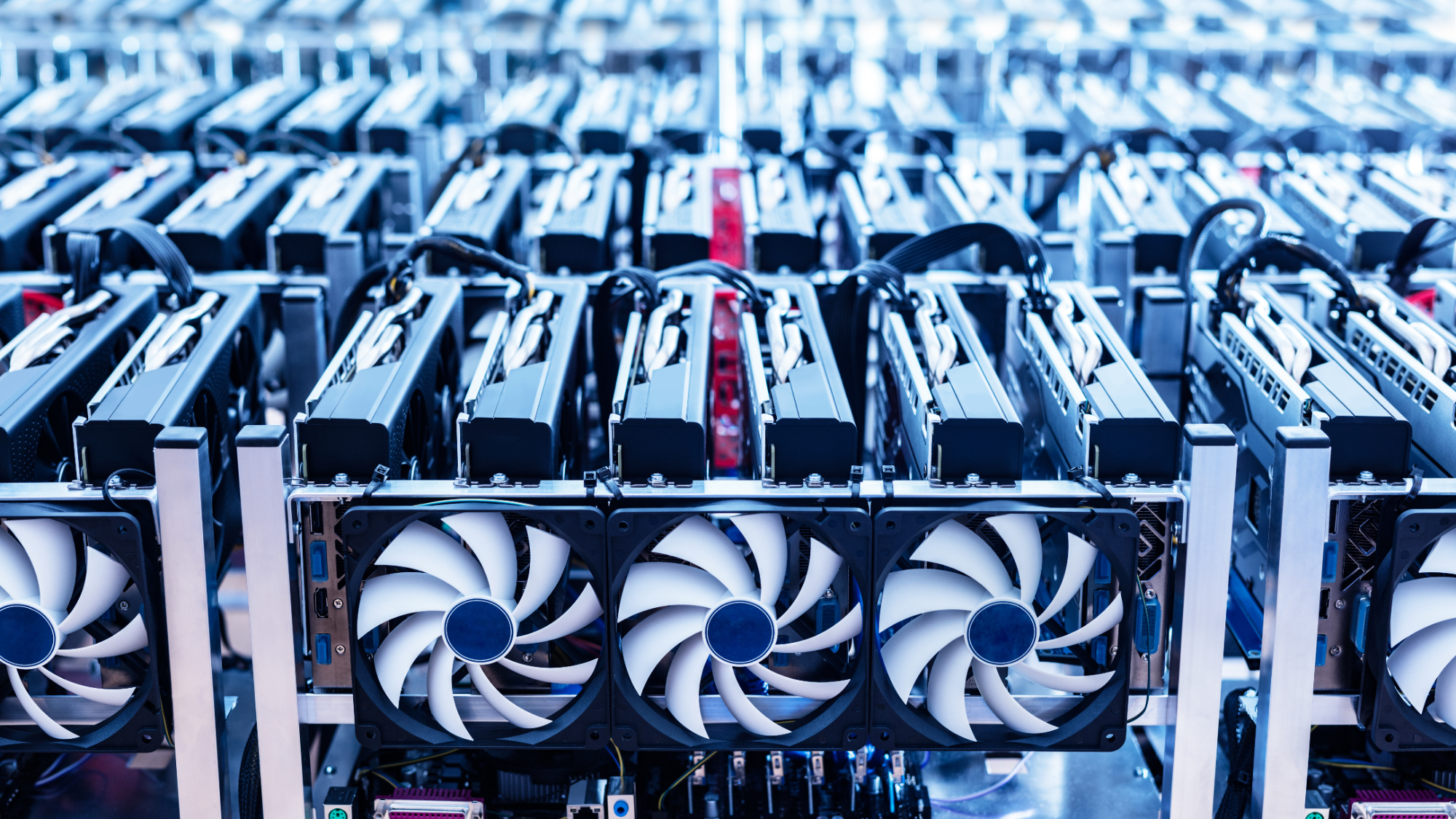

If you’ve got a powerful GPU in your machine, it may seem like a bit of a waste to leave all that computing power sitting in the background, doing relatively little at idle. If so, Salad, a company that offers game codes, gift cards, and other items in exchange for users’ GPU resources, might seem like a reasonable way to put your machine to work.

However, the software’s default settings opt users into generating adult content. An option to “configure workload types manually” lets users uncheck the “Adult Content Workloads” option (via 404 Media), but it is easy to miss during setup, as I confirmed when I tested the process myself.

In the Salad Discord server, moderators maintain that the adult content workloads option is unchecked by default, and that any Stable Diffusion image generation job is automatically considered adult content. When several users asked whether they would earn less money on Salad with the setting off, a moderator explained that “There might be times where you earn a little less if we have high demand for certain adult content workloads, but we don’t plan to make those companies the core of our business model.”

Earnings from renting out GPU time to Salad are redeemable in its storefront, where users can trade them for a variety of rewards, including Roblox gift cards, Fortnite DLC skins, and Minecraft vouchers.

On the Salad website, the company explains that “some workloads may generate images, text or video of a mature nature”, and that any adult content generated is wiped from a user’s system as soon as the workload is completed. This fuzzy generalisation around sexually explicit content is likely because Salad is unable to view and moderate images created through its platform, and is therefore covering its bases by marking all image generation as “adult”.

However, one of Salad’s clients is CivitAI, a platform for sharing AI-generated images that has previously been investigated by 404 Media. That investigation found that the service hosts image-generating AI models of specific people, whose likeness can then be combined with pornographic AI models to generate non-consensual sexual images. I’ll share the link to the investigation here, but as a content warning, the subjects discussed are very sexually graphic in nature.

A spokesperson for CivitAI told 404 Media that “Salad handles a portion of image generation depending on the time of day. We scale our service with them depending on the amount of demand.” Given this connection, it seems highly likely that Salad’s users have been generating AI pornography for the site.

Well, this all feels remarkably grubby, doesn’t it? There’s something about the combination of AI porn generation in exchange for vouchers in games frequently played by children and young adults that really gets the hackles raised, particularly as the option to disable it is far from obvious.

The creation of non-consensual sexual imagery of real-life people strikes me as particularly deplorable, and combined with the level of abstraction here, it means that many users may well have been generating this content on their own machines with no explicit knowledge of what they were signing up for.

Gah, the internet can be gross sometimes. That’s about where I want to leave this.